While many analysts and media outlets continue to express concern about automation taking jobs, some scientists are working to help robots work more closely with people. The Center for Robotics and Biosystems is conducting such interdisciplinary research at Northwestern University’s Technological Institute. The center expanded into a 12,000-sq.-ft. space late last year.

Evanston, Ill.-based Northwestern University has a long history with robotics. In the 1950s, Prof. Dick Hartenberg and Ph.D. student Jacques Denavit devised parameters to describe the kinematics of how robot links and joints move. In the mid-1990s, Professors Michael Peshkin and Ed Colgate coined the term “cobots” for collaborative robots.

“We realized that autonomy for robots wasn’t the right goal,” said Peshkin. “Instead, we could combine the strengths of people in intelligence, perception, and dexterity with the strengths of robots in persistence, accuracy, and interface to computer systems.”

Multidisciplinary effort

Faculty at the Center for Robotics and Biosystems come from departments as diverse as mechanical engineering, biomechanical engineering, computer science, electrical and computer engineering, the Feinberg School of Medicine, and the Shirley Ryan AbilityLab. They work on sensors, bio-inspired systems, neuroprostheses, and exoskeletons, among other technologies.

“In 2014, we started with an MS in robotics and bioscience,” said Kevin Lynch, director of the center. “We saw an increase in demand, and while our Ph.D. program is focused on research, it was clear that there’s a need for well-trained engineers who can make an impact now.”

“Northwestern is unique among universities because we have the Shirley Ryan AbilityLab, formerly the Rehabilitation Institute of Chicago,” said Lynch, who is also chair of Northwestern’s Department of Mechanical Engineering and editor-in-chief of IEEE Transactions on Robotics. “It’s the No. 1 rehabilitation institute in the country, and we have access to patients who have suffered strokes or spinal injuries. We can get assistive devices to them more quickly.”

“People are eager to participate in experiments, and if you’re going to design a human-robot system, you have to study the human,” he told The Robot Report. “You must program the robot to adapt to the human.”

The center has 10 core faculty members, plus another 14 or so from across the university and the rehabilitation lab, as well as up to 100 graduate and undergraduate researchers, said Lynch.

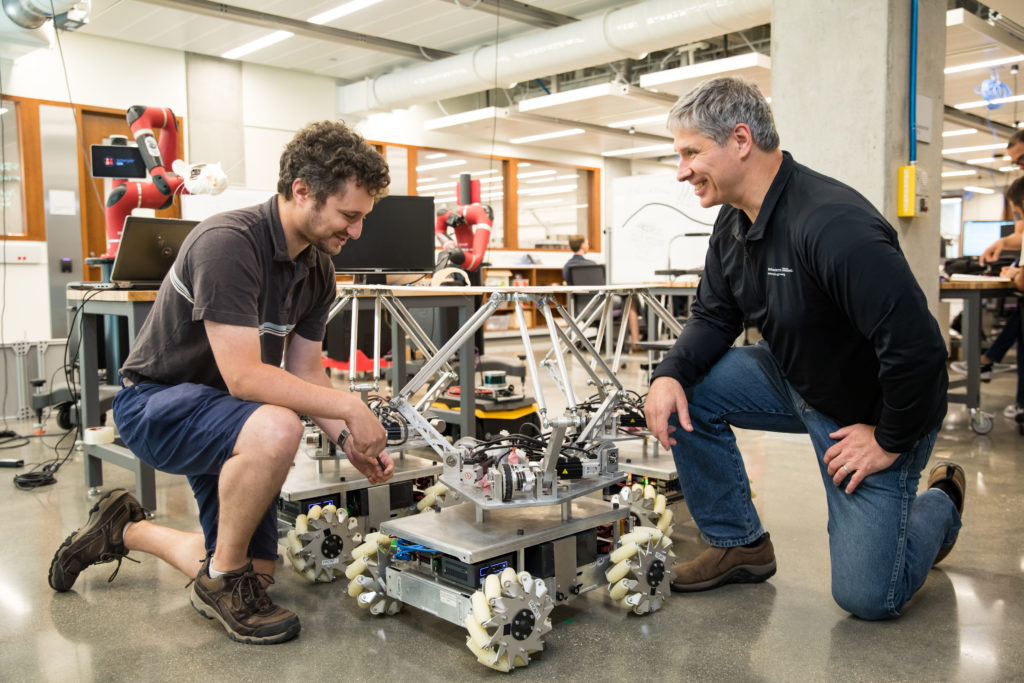

A custom mobile manipulator with Matt Elwin, assistant professor of instruction of mechanical engineering (left), and Kevin Lynch, director of the Center for Robotics and Biosystems. Source: Northwestern University

Cobots and collaboration at the Center for Robotics and Biosystems

While “cobots” usually refers to collaborative robot arms in industry, the Center for Robotics and Biosystems has a slightly different definition.

“My colleagues Ed Colgate and Michael Peshkin first said it was a robot mechanically designed to be safe to operate around humans,” Lynch recalled. “A lot of people are working on collaborative robots, but we’re talking about a robot that’s physically interacting with humans. For us, it means using visual, haptic, and audio interfaces to safely interact, like a prosthesis or a person physically lifting something with a robot.”

“This is different than a cobot arm or a mobile robot rolling up to someone,” he said. “Most of the research in our lab is TRL [Technology Readiness Level] 1 — it’s fairly basic research. We have some collaboration with companies, but we’re generally studying the principles of how robots and humans can exchange power safely and effectively for manipulation, rehabilitation, and prosthetics.”

At the same time, Northwestern University does have a strong history of commercialization, added Lynch, citing Stryker Corp.’s acquisition of surgical robotics company Mako, which was based on Northwestern research.

More recently, he said, Tanvas Inc. demonstrated haptic touchscreens using electroadhesion to convey a sense of texture at CES 2020.

Research and applications

“One project is working with mobile manipulators, allowing humans and robots to carry something across a cluttered workspace,” Lynch said. “We’re working on safe control, compliance, and collaboration. While we have advanced warehouse applications in mind, we’re not working with a specific company on a specific application.”

“For example, putting up wallboard in construction is difficult to fully automate,” he added. “Humans bring intelligence, flexibility, and situational awareness to the task. How do we create an intuitive interface between humans and robots? Humans send cues to each other by sending or transferring forces through an object, learning control through manipulating the object.”

“This also applies to people and robots trying to maneuver in a crowded space,” said Lynch. “A human knows when it’s safe to get close to someone else or to nudge them, but a robot does not.”

Center for Robotics and Biosystems Director Kevin Lynch and a robot hand. Source: Northwestern University

Center for Robotics and Biosystems studies human-machine interaction

Brenna Argall, an associate professor of computer science, mechanical engineering, and physical medicine and rehabilitation, is among the researchers conducting experiments around human-machine interaction and interfaces at Northwestern and the Shirley Ryan AbilityLab, said Lynch.

“Our faculty is doing interesting work on intelligent wheelchairs, taking human performance into account,” he said. “If someone with a physical disability needs help with locomotion, the wheelchair gets input, but that person may have a tremor. They’re trying to design ‘sliding autonomy,’ in which the wheelchair becomes an autonomous robot if the quality of the input is not good but slaved to user commands if it is.”

“A robotic wheelchair equipped with a camera can see the room, and the user driving the wheelchair might give input that drives it into the wall rather than an adjacent doorway,” said Lynch. “The robot can learn how well the user can operate on his or her own and adjust the signal.”
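To make the idea of sliding autonomy concrete, here is a minimal sketch in Python. It is not the Northwestern researchers' method. It assumes, purely for illustration, that input quality can be scored from how much recent joystick readings jitter, and that the wheelchair blends the user's command with an autonomous planner's command in proportion to that score. The function names `input_quality` and `blend`, and the `tremor_scale` parameter, are hypothetical.

```python
from statistics import pstdev


def input_quality(recent_commands, tremor_scale=0.5):
    """Score recent joystick readings from 0.0 (very shaky) to 1.0 (steady).

    Assumption: jitter is measured as the population standard deviation of
    the readings, and tremor_scale is the jitter at which quality hits zero.
    """
    if len(recent_commands) < 2:
        return 1.0  # too little data to detect a tremor; trust the user
    jitter = pstdev(recent_commands)
    return max(0.0, 1.0 - jitter / tremor_scale)


def blend(user_cmd, auto_cmd, quality):
    """Slide between user control (quality 1.0) and autonomy (quality 0.0)."""
    return quality * user_cmd + (1.0 - quality) * auto_cmd


# Steady input: the chair follows the user's steering command.
steady = [0.2, 0.2, 0.2, 0.2]
steady_out = blend(steady[-1], -0.1, input_quality(steady))

# Oscillating input: the chair falls back to the planner's command.
shaky = [0.5, -0.5, 0.5, -0.5]
shaky_out = blend(shaky[-1], -0.1, input_quality(shaky))
```

In this sketch, a steady hand leaves the user fully in control, while strongly oscillating input hands steering to the autonomous planner. A real system would also have to weigh camera data and the user's learned ability, as Lynch describes.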

Prof. Brenna Argall is working on partially autonomous wheelchairs. Source: Northwestern University

Drones to provide situational awareness

Another example of the research at the Center for Robotics and Biosystems is work on single-motor drones. “Can you control the flight of a quadcopter with but one motor?” Lynch asked. Michael Rubenstein, assistant professor in electrical engineering, computer science, and mechanical engineering, is working on the innovative device, as well as drone swarms.

Drones are also the subject of research by Todd Murphey, professor of mechanical engineering at Northwestern, who is working on the use of drones and virtual reality to provide situational awareness in situations where vision is obscured or impaired. The Defense Advanced Research Projects Agency (DARPA) is interested in such human-machine coordination for soldiers in urban environments.

One of the challenges for the Center for Robotics and Biosystems is staying focused on the technology challenge, said Lynch. “We have to direct research to solving whatever problems, but additional goals could sidetrack you. Shorter-term goals are great for master’s students, but not five-year Ph.D.s.”

“Some of the government or corporate-funded research is very results-oriented, so it’s harder to have a long-term focus,” he added. “It’s nice to have an ecosystem where we have both longer-term research done by staff members and that done by students.”

Prof. Todd Murphey (right) is researching the use of robots to provide situational awareness. Source: Northwestern University

Embodied intelligence versus the cloud

Whether compute occurs on the edge or in the cloud depends on the devices, the amount and types of data to process and analyze, the environment, and the availability of secure networking, not to mention the application itself.

“Artificial intelligence is a hot topic,” Lynch acknowledged. “At the highest level, embodied intelligence has to have some physical connection to the world. It’s not just chatbots but AI that receives information from the world through sensors and acts on it.”

“Another meaning of embodied intelligence is in mechanical design,” he noted. “Humans and animals are good at walking not only because of neural control structures but also because our legs swing like pendulums, so designers need to think of such capabilities.”

“We’re not just evolving the hand or control strategies or vision — we’re doing them all at the same time,” said Lynch. “I bristle when I hear that deep learning will solve all our problems. It’s a strategy for mapping inputs to outputs, but you can’t put general intelligence on a random collection of motors and sensors.”

Rethinking education at the Center for Robotics and Biosystems

Not only is Northwestern’s Center for Robotics and Biosystems advancing the state of human-robot interaction, drone swarms, and embodied intelligence; it’s also changing robotics education.

“We teach a six-month specialization in Modern Robotics online on Coursera,” Lynch said. “Thousands of people around the world have signed up, and we use a free textbook, plus a lightboard that Michael Peshkin developed in-house for video generation.”

“We also developed a new oscilloscope that you can carry with you,” he said. “Teaching in an electronics lab with $3,000 devices chained to a desk is often uninspiring, so we developed a portable tool for less than $100. Just as Arduino made microcontrollers available to everyone, nScope is democratizing and scaling electronics development.”